Artificial Intelligence (AI) and Large Language Models (LLMs)

Anthropic has devised something it calls “AI Fluency”: a way of approaching and working with AI that outlasts tips and tricks, which quickly become outdated. Its proposed “4D framework” for AI Fluency rests on these four core competencies:

- Delegation: Deciding what work to do with AI vs. yourself

- Description: Communicating effectively (e.g., context/prompt engineering)

- Discernment: Evaluating AI output/behavior

- Diligence: Ensuring responsible AI interaction (accuracy, safety, security, accountability)

Delegation

The clarity to decide what to collaborate on with AI, what to do yourself, and the wisdom to know the difference.

- Problem Awareness: Clearly defining goals and the work needed before involving AI tools

- Platform Awareness: Knowing, through hands-on experience, what different AI systems can and can’t do (capabilities and limitations)

- Task Delegation: Strategically dividing work between you and AI
  - Automation: AI executes specific tasks based on your instructions
  - Augmentation: You and AI collaborate as creative thinking and task execution partners
  - Agency: You configure AI to work independently on your behalf, establishing its knowledge and behavior patterns rather than just giving it specific tasks

What could be usefully automated? Where would augmentation create more value than working separately? What should be done by a human alone? What could be done by an agent on your behalf?

“I’m planning how to [insert task] and want to discuss with you what a delegation plan might look like, figuring out which parts I should delegate to an AI like you and which I shouldn’t. Can you help me with this?”

Agency

Agency doesn’t mean uncontrolled action or just “YOLO-ing” it. It means intelligent agency with clear checkpoints: human oversight for critical decisions, while smaller, time-consuming decisions are delegated. It means taking a complex task and seeing it through to completion.

When to consider using AI agents

- Is the task complex enough? No -> workflows; yes -> agents.

- Is the task valuable enough? <$0.1 -> workflows; >$1 -> agents.

- Are all parts of the task doable? No -> reduce scope; yes -> agents.

- What is the cost of error/error discovery? High -> read-only/human-in-the-loop; low -> agents.
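As a rough illustration, the checklist above can be read as a decision function that asks the questions in order and stops at the first answer that applies. This is a minimal Python sketch, not a formal rule: `Task` and `choose_approach` are hypothetical names, and because the checklist says nothing about values between $0.1 and $1, the sketch simply flags that range as a judgment call.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """Hypothetical task description; the fields mirror the checklist questions."""
    is_complex: bool
    value_usd: float        # rough value of completing the task once, in dollars
    all_parts_doable: bool
    error_cost_high: bool   # cost of an error, or of discovering one


def choose_approach(task: Task) -> str:
    """Ask the checklist questions in order and return the first answer that applies."""
    if not task.is_complex:
        return "workflow"
    if task.value_usd < 0.1:
        return "workflow"
    if task.value_usd <= 1:
        # The checklist is silent between $0.1 and $1, so leave it to judgment.
        return "judgment call"
    if not task.all_parts_doable:
        return "reduce scope"
    if task.error_cost_high:
        return "read-only / human-in-the-loop"
    return "agent"


print(choose_approach(Task(is_complex=True, value_usd=5.0,
                           all_parts_doable=True, error_cost_high=False)))  # agent
```

The same valuable, doable task with a high cost of error is routed to read-only or human-in-the-loop operation instead of a fully autonomous agent.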

Description

The art of communicating your needs to AI

There is much similarity between describing a task to an AI and describing it to a human.

- Product Description: What you want, the end result
  - Output, format, audience, style, etc.

- Process Description: The way to success, the thought process
  - Specific approaches, tools, methods, data, preferred order, etc.

- Performance Description: The AI’s general behavior
  - Role and tone; concise or detailed; challenging or supportive?

Prompt Engineering

Prompt engineering can be thought of as programming in natural language.

Try prompt engineering before moving on to more advanced techniques such as RAG and fine-tuning.

As a first step, ask the AI itself to help improve your prompt; successful prompting is iterative.

A good prompt is structured using <XML_tags> and {{VARIABLES}} and consists of the following parts:

Role: {{ROLE}}

Tone: {{TONE}}

Style: {{STYLE}}

Task: {{TASK}}

<request>

{{REQUEST}}

</request>

<context>

{{CONTEXT}}

</context>

<example>

{{EXAMPLE}}

</example>

<constraints>

{{CONSTRAINTS}}

</constraints>

<steps>

Let's think step-by-step.

1. Output an overview of every dimension of my request.

2. Find points of uncertainty.

3. Ask as many clarifying questions as needed.

</steps>

Before answering, please think through this problem carefully. Consider the different factors involved, potential constraints, and various approaches before recommending the best solution.

If you don't know, say "I don't know".

Answer only if you're very confident in your response.

When making a factual claim, be sure to refer back to the source.

Compose three different answers, and then recommend one of them.

{{REPEAT}}

In order, the parts of the template are:

1. Role or tone: Specify how you want the AI to communicate.

2. Context: Be specific about what you want, why you want it, and the relevant background.

3. Example(s): Demonstrate the output style or format you’re looking for. This is called “n-shot” prompting, where “n” is the number of examples given.

4. Constraint(s): Clearly define format, length, and other output requirements.

5. Steps: Guide the AI through multi-step reasoning, making the model spell out its reasoning. This is called chain-of-thought (CoT) prompting.

6. Think first: Ask the AI to think before answering, giving it space to work through its process.

7. Multiple answers: Ask for several answers. This is especially helpful for boosting creativity.

8. Repetition: Repeat any important instructions.
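To make the {{VARIABLES}} idea concrete, here is a minimal Python sketch that fills the placeholders of a trimmed-down version of the template and renders n-shot examples, one per <example> tag. `TEMPLATE`, `fill_template`, and `render_examples` are hypothetical names for illustration, the sample values are made up, and the tone, style, steps, and repeat sections are omitted for brevity.

```python
import re

# A trimmed-down, hypothetical version of the template; tags match the ones above.
TEMPLATE = """Role: {{ROLE}}
Task: {{TASK}}
<request>
{{REQUEST}}
</request>
<context>
{{CONTEXT}}
</context>
{{EXAMPLES}}
<constraints>
{{CONSTRAINTS}}
</constraints>"""

PLACEHOLDER = re.compile(r"\{\{(\w+)\}\}")


def render_examples(examples: list[str]) -> str:
    """Wrap each example in its own <example> tag; len(examples) is the n in n-shot."""
    return "\n".join(f"<example>\n{ex}\n</example>" for ex in examples)


def fill_template(template: str, values: dict[str, str]) -> str:
    """Replace every {{NAME}} in one pass, failing loudly if a value is missing."""
    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in values:
            raise KeyError(f"no value supplied for placeholder {name}")
        return values[name]

    return PLACEHOLDER.sub(substitute, template)


prompt = fill_template(TEMPLATE, {
    "ROLE": "Technical editor",
    "TASK": "Shorten release notes",
    "REQUEST": "Rewrite the notes below in plain language.",
    "CONTEXT": "The readers are non-technical customers.",
    "EXAMPLES": render_examples(["Fixed: login now works on slow networks.",
                                 "Added: export to CSV."]),
    "CONSTRAINTS": "At most five bullet points.",
})
print(prompt)
```

This call produces a 2-shot prompt. A missing value raises a `KeyError` rather than silently sending a literal `{{NAME}}` to the model, which is easy to miss when prompts are assembled by hand.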

For more helpful tips and examples, see:

- Anthropic’s Prompt Engineering Overview

- The OpenAI Cookbook

- Google’s Prompt Design Strategies

Fine-tuning

Fine-tuning updates the LLM itself, essentially producing a new model.

It is well suited to behavior alignment (reasoning format, style, classification logic) and to domains with static knowledge.
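Fine-tuning data is usually a file of example conversations that demonstrate the desired behavior. The Python sketch below writes classification examples in the chat-style JSONL layout accepted by OpenAI’s fine-tuning API (one `{"messages": [...]}` object per line); other providers use different formats, and the system prompt, tickets, and labels are hypothetical.

```python
import json

# Hypothetical system prompt and labelled tickets teaching a fixed label set.
SYSTEM = "Classify the support ticket. Reply with exactly one label: billing, bug, or other."
EXAMPLES = [
    ("My invoice shows the wrong amount.", "billing"),
    ("The app crashes when I upload a photo.", "bug"),
    ("Do you have an office in Berlin?", "other"),
]


def to_jsonl(examples: list[tuple[str, str]]) -> str:
    """Emit one JSON object per line, each a full system/user/assistant exchange."""
    lines = []
    for ticket, label in examples:
        record = {"messages": [
            {"role": "system", "content": SYSTEM},
            {"role": "user", "content": ticket},
            {"role": "assistant", "content": label},
        ]}
        lines.append(json.dumps(record))
    return "\n".join(lines)


print(to_jsonl(EXAMPLES))
```

A real training set needs many more examples than this; what the model learns is the pattern every example repeats, here a single bare label in the assistant turn.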

Retrieval-Augmented Generation (RAG)

RAG keeps the LLM as-is and adds a static library of documents that is searched at query time.

RAG is well suited to knowledge augmentation, where new knowledge is added often and retrieving the latest, accurate information is crucial.
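A minimal RAG loop retrieves the most relevant passages and pastes them into the prompt, leaving the model untouched. The Python sketch below assumes a tiny hypothetical in-memory library and ranks documents by word overlap as a stand-in for the embedding search a real system would use; `retrieve` and `build_prompt` are illustrative names.

```python
import re

# Hypothetical in-memory library; a real system would use a vector store.
LIBRARY = {
    "pricing.md": "The Pro plan costs 20 dollars per month.",
    "support.md": "Support is available on weekdays from 9am to 5pm.",
    "security.md": "All data is encrypted at rest and in transit.",
}


def tokens(text: str) -> set[str]:
    """Lower-cased words, used as a crude stand-in for embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, k: int = 1) -> list[tuple[str, str]]:
    """Return the k documents sharing the most words with the question."""
    q = tokens(question)
    ranked = sorted(LIBRARY.items(),
                    key=lambda item: len(q & tokens(item[1])),
                    reverse=True)
    return ranked[:k]


def build_prompt(question: str, k: int = 1) -> str:
    """Paste the retrieved passages into the prompt; the model itself is unchanged."""
    docs = "\n".join(f'<document source="{name}">\n{text}\n</document>'
                     for name, text in retrieve(question, k))
    return (f"<context>\n{docs}\n</context>\n"
            "Answer using only the context above and cite the source.\n"
            f"Question: {question}")


print(build_prompt("How much does the Pro plan cost per month?"))
```

Updating knowledge means editing `LIBRARY`, not retraining; swapping the word-overlap score for embeddings changes retrieval quality, not the shape of the loop.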

Model Context Protocol (MCP)

Like RAG, MCP keeps the LLM as-is, but it adds a dynamic library: it is a protocol that lets LLMs communicate with external services and tools.
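Under the hood, MCP messages are JSON-RPC 2.0 requests and responses. This Python sketch only builds the two requests a client would send to discover and invoke a tool (`tools/list` and `tools/call`); it omits the transport (stdio or HTTP) and the initialization handshake, and the `get_weather` tool and its arguments are hypothetical.

```python
import json
from itertools import count
from typing import Optional

_next_id = count(1)  # JSON-RPC requests carry an id so responses can be matched


def jsonrpc_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request, the message format MCP builds on."""
    message = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Discover the server's tools, then invoke one by name with JSON arguments.
list_tools = jsonrpc_request("tools/list")
call_tool = jsonrpc_request("tools/call", {
    "name": "get_weather",             # hypothetical tool
    "arguments": {"city": "Oslo"},     # hypothetical argument schema
})
print(list_tools)
print(call_tool)
```

Because tools are discovered at run time rather than baked into a document collection, the same client can reach whatever services a server exposes.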

Discernment

The art of evaluating how well your needs were met by AI

- Product Discernment: Evaluating the quality of AI outputs
  - Accuracy, appropriateness, coherence, relevance

- Process Discernment: Evaluating how the AI approached the task
  - Logical errors, attention gaps, circular reasoning

- Performance Discernment: Evaluating how the AI is communicating with you
  - Is the communication style effective?

When discernment flags a problem, a better description is often the solution, and sometimes the delegation needs adjustment.

Giving feedback to the AI

- Specifying the problem

- Clearly explaining why it is a problem

- Providing concrete suggestions for improvement

- Revising your instructions or examples

Agent self-discernment

When applying AI agency (or “vibe coding”), an important piece is how the AI evaluates its own work.

Test-driven development (writing tests first) has emerged as a state-of-the-art technique for agentic coding. Having the AI write and review tests before moving on to implementation gives it a concrete target to iterate against, which can greatly improve the efficiency and effectiveness of AI agents.

However, it’s easy to end up with tests that are too specific to the current feature. Keeping tests minimal, general, and end-to-end helps.

Writing acceptance tests, using the end product, and spot-checking key facts you understand are all good ways to practice discernment on AI agent output.
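A minimal sketch of the test-first loop in Python: the test is written (and reviewed) before the implementation, checks only observable behavior, and gives the agent a concrete target to iterate against. `slugify` is a hypothetical feature chosen for illustration; under pytest the `test_` function would be collected automatically, and here it is simply called at the end.

```python
import re


# Step 1: write (and review) the test first. It pins down behavior only,
# so any correct implementation passes it.
def test_slugify():
    assert slugify("Hello, World!") == "hello-world"
    assert slugify("  AI   Fluency  ") == "ai-fluency"
    assert slugify("") == ""


# Step 2: the agent iterates on the implementation until the test passes.
def slugify(title: str) -> str:
    """Lower-case the title and join its runs of letters and digits with hyphens."""
    return "-".join(re.findall(r"[a-z0-9]+", title.lower()))


test_slugify()  # pytest would collect this automatically; here we call it directly
```

Note that the test never inspects the regular expression or any intermediate value, so the agent is free to rewrite the implementation without the test becoming stale.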

Diligence

Ethical and safety aspects for responsible AI collaboration.

- Creation Diligence: Being thoughtful about which AI systems you choose and how you work with them

- Transparency Diligence: Being open about AI’s role in your work
  - Who needs to know?
  - How (and when) should I communicate this?
  - What level of detail is needed?

- Deployment Diligence: Taking ownership of AI-assisted outputs you share with others
  - Verify facts
  - Check for biases
  - Ensure accuracy

The Lethal Trifecta

The lethal trifecta[1] of capabilities is:

- Access to your private data: one of the most common purposes of tools in the first place!

- Exposure to untrusted content: any mechanism by which text (or images) controlled by a malicious attacker could become available to your LLM

- The ability to externally communicate in a way that could be used to steal your data (Simon Willison calls this “exfiltration”)

If your agent combines these three features, an attacker can easily trick it into accessing your private data and sending it to that attacker.
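One way to apply this when setting up an agent is to audit its tool list as a whole, since the risk comes from the combination rather than from any single tool. The Python sketch below is a hypothetical checklist, not a security control: the `Tool` flags are assumptions you would have to set by hand for each tool.

```python
from dataclasses import dataclass


@dataclass
class Tool:
    """Hypothetical tool description; each flag is one leg of the trifecta."""
    name: str
    reads_private_data: bool = False
    sees_untrusted_content: bool = False
    communicates_externally: bool = False


def has_lethal_trifecta(tools: list[Tool]) -> bool:
    """True if the tools, taken together, cover all three capabilities."""
    return (any(t.reads_private_data for t in tools)
            and any(t.sees_untrusted_content for t in tools)
            and any(t.communicates_externally for t in tools))


agent_tools = [
    Tool("read_inbox", reads_private_data=True, sees_untrusted_content=True),
    Tool("send_email", communicates_externally=True),
]
print(has_lethal_trifecta(agent_tools))  # True
```

Neither tool covers all three capabilities on its own, but reading an inbox (private data plus attacker-controlled emails) combined with sending email completes the trifecta; removing either tool breaks it.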

Tools

Coding agents

Coding agents are AI products like Anthropic’s Claude Code and OpenAI’s Codex. Some tips that I’ve found helpful when working with these tools are:

- New problem = new chat: clear the context and start fresh.

- As with “normal” AI, be specific when prompting. Some helpful prompts include:
  - Start with brainstorming/planning.
  - Document the plan in a markdown document.
  - Ultrathink
  - Spin up as many parallel subagents as you find beneficial.
  - “Do not generate code until you are fully ready.”
  - Stop if you find anything unexpected.

- Keep a COMMON_MISTAKES.md file and reference it every time.

- Track all work in git.
  - Commit often, with small and focused commits.
    - This goes hand-in-hand with breaking your work down into small pieces.

- Always write a test so that the AI can iterate against it (test-driven development).
  - About three end-to-end (e2e) tests per feature are enough.

- Do not enable MCPs by default. Enable them only when you know you’ll need them, then disable them afterward, because MCPs eat up your context window.

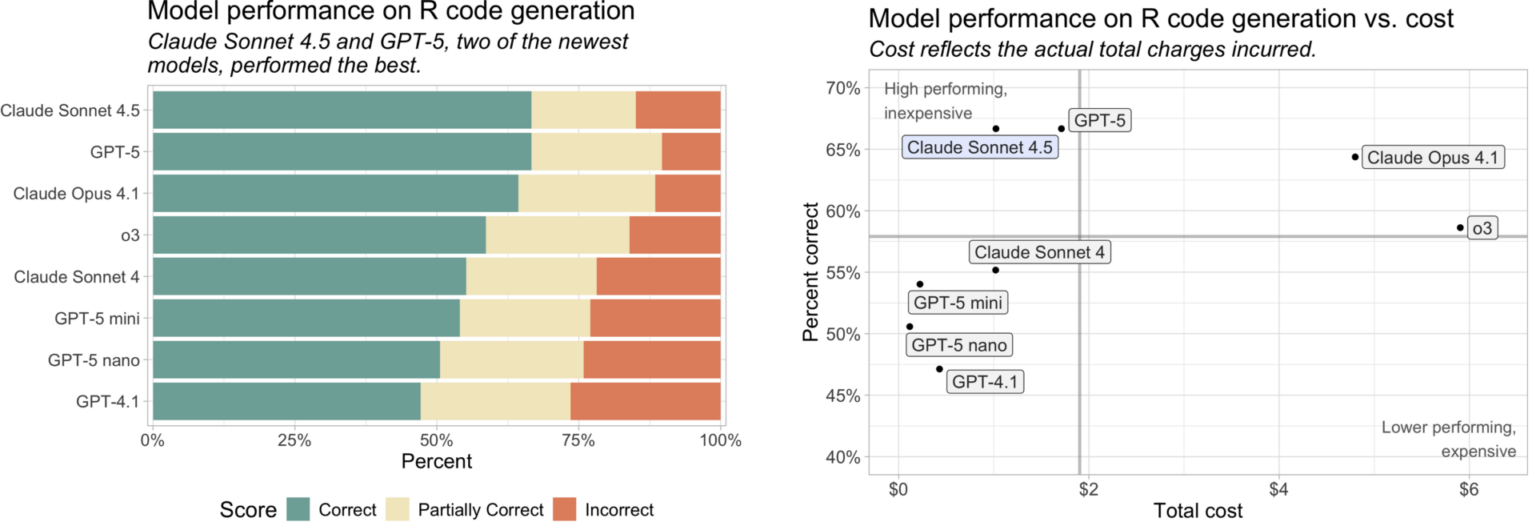

Models

R packages

- mcptools

- btw

- ellmer

- chores

- gander

- mall

References

- Anthropic

- Simon P. Couch

- Sara Altman